Federated Learning Explained: The Ultimate Guide to Decentralized AI

Introduction: Why Federated Learning Matters

Enterprises today face ever-growing volumes of fragmented data spread across departments, regions, and partner networks. Traditional centralized AI demands moving all that data into one place—raising privacy, governance, and cost challenges. Federated learning flips the model: instead of shipping raw data, models travel to where the data lives.

At a glance:

- Solves the “data silo” dilemma without compromising on privacy

- Enables collaborative AI across hospitals, banks, manufacturing plants, and more

- Powers faster innovation by leveraging under-utilized edge compute

This guide covers federated learning from the fundamentals to advanced topics such as federated RAG and governance best practices.

Federated Learning Explained

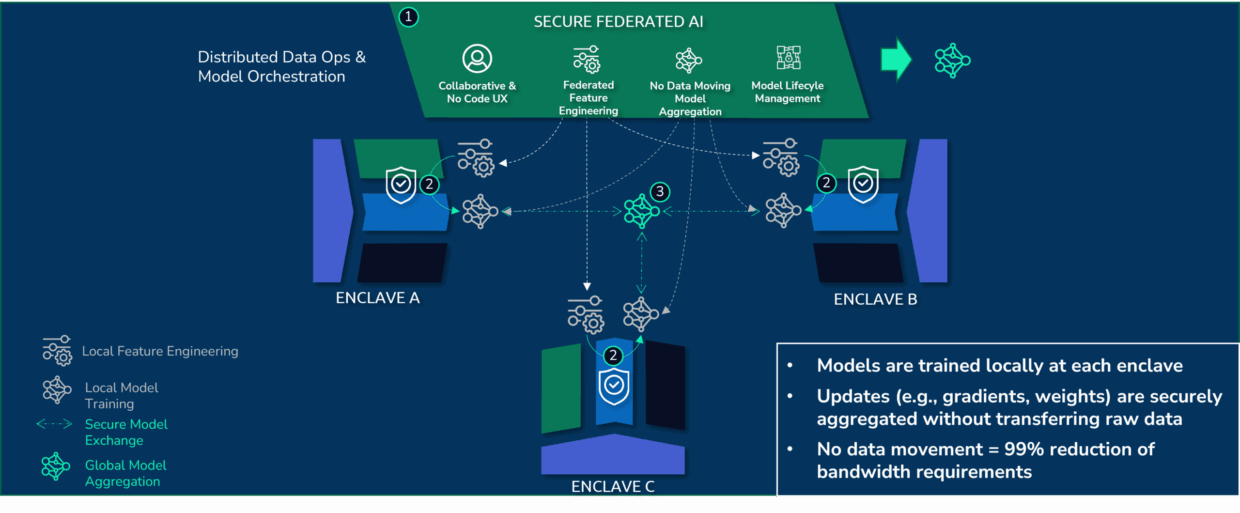

Federated learning is a decentralized machine learning paradigm that trains a shared global model using data spread across multiple clients (“federates”) without transferring that data off-site.

- Simple analogy: Imagine you’re writing a cookbook with chefs around the world. Instead of sending them all recipes and risking leaks, you send the blank pages, they add their local recipes, and send back only their additions. You merge the best recipes into one masterpiece—without ever exposing secret ingredients.

- Core promise: “Training without moving the data” preserves data sovereignty, cuts bandwidth costs, and accelerates compliance.

- In simple terms: You get the power of a joint AI model while every participant keeps its data behind its own firewall.

A typical training round works as follows:

1. Initialization: An orchestration server holds the initial model parameters.

2. Local Training: Each federate downloads the model and trains it locally on its private data for one or more epochs.

3. Secure Aggregation: Federates encrypt and upload only model updates (weights or gradients), never raw data.

4. Global Update: The server combines the incoming updates, for example with a weighted average such as FedAvg, to produce a new global model.

5. Iteration: Steps 2–4 repeat until convergence.
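
The five steps above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not a production recipe: the model is a plain linear regression, the clients and their data are synthetic, clients send full weight vectors rather than gradients, and the encryption in step 3 is left out entirely. The function names (`local_train`, `fedavg`, `run_federation`) are hypothetical.

```python
import numpy as np

def local_train(weights, X, y, lr=0.1, epochs=5):
    """Step 2: a few epochs of full-batch gradient descent on one client's private data."""
    w = weights.copy()
    for _ in range(epochs):
        grad = 2.0 * X.T @ (X @ w - y) / len(y)  # gradient of mean squared error
        w -= lr * grad
    return w

def fedavg(client_weights, client_sizes):
    """Step 4: average client models, weighting each by its number of local examples."""
    sizes = np.asarray(client_sizes, dtype=float)
    return np.average(np.stack(client_weights), axis=0, weights=sizes)

def run_federation(clients, dim, rounds=50):
    """clients: list of (X, y) pairs that never leave the client in a real deployment."""
    global_w = np.zeros(dim)                                  # step 1: initialization
    for _ in range(rounds):                                   # step 5: iteration
        local_ws = [local_train(global_w, X, y) for X, y in clients]
        sizes = [len(y) for _, y in clients]                  # step 3: only weights and counts are shared
        global_w = fedavg(local_ws, sizes)
    return global_w

# Synthetic example: three clients with different amounts of data (all values made up).
rng = np.random.default_rng(0)
true_w = np.array([2.0, -1.0])
clients = []
for n in (40, 80, 120):
    X = rng.normal(size=(n, 2))
    y = X @ true_w + 0.01 * rng.normal(size=n)
    clients.append((X, y))

final_w = run_federation(clients, dim=2)
print(final_w)
```

Weighting by example count is what distinguishes FedAvg from a plain mean: a client with 120 rows influences the global model three times as much as one with 40.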

Federated learning isn’t one-size-fits-all—different scenarios require different approaches. Here are the main types of federated learning architectures and when to use them.

| Type | Data Partitioning | When to Use |
| --- | --- | --- |
| Horizontal FL (HFL) | Same features, different users | Bank branches with similar schemas |
| Vertical FL (VFL) | Different features, same users | Joint credit scoring between bank + e-commerce |
| Federated Transfer Learning (FTL) | Different users & features | Cross-industry collaborations with little data overlap |
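
The difference between horizontal and vertical partitioning is easiest to see on a toy table. The sketch below is purely illustrative: the user IDs, feature names, values, and the helper functions `horizontal_split` and `vertical_split` are all made up for this example.

```python
# Toy dataset: one row per user, one column per feature (all values are invented).
records = {
    "u1": {"income": 52, "age": 31, "basket_size": 3},
    "u2": {"income": 75, "age": 45, "basket_size": 7},
    "u3": {"income": 38, "age": 27, "basket_size": 2},
    "u4": {"income": 91, "age": 52, "basket_size": 5},
}

def horizontal_split(records, user_groups):
    """Horizontal FL: each party holds ALL features for a DISJOINT set of users."""
    return [{u: dict(records[u]) for u in group} for group in user_groups]

def vertical_split(records, feature_groups):
    """Vertical FL: each party holds a DISJOINT set of features for the SAME users."""
    return [
        {u: {f: row[f] for f in feats} for u, row in records.items()}
        for feats in feature_groups
    ]

# Two bank branches with the same schema but different customers.
branch_a, branch_b = horizontal_split(records, [["u1", "u2"], ["u3", "u4"]])

# A bank and an e-commerce shop that know different things about the same customers.
bank, shop = vertical_split(records, [["income", "age"], ["basket_size"]])

print(sorted(branch_a), sorted(branch_b))   # different users
print(sorted(bank["u1"]), sorted(shop["u1"]))  # different features
```

In the vertical case the parties must first agree on which users they share, which is why real systems typically add a private record-matching step before training.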

Choosing between federated learning and centralized models isn’t a question of “better or worse”; federated learning is the right trade-off when privacy, fragmented data, and governance are top priorities.

| Aspect | Federated Learning | Centralized Learning |
| --- | --- | --- |
| Data Movement | Models move to data | Data moves to a central server |
| Privacy | High (raw data never leaves premises) | Lower (data aggregated & stored centrally) |
| Compliance | Easier for GDPR, HIPAA, CCPA | Requires heavy data governance |
| Compute | Distributed; leverages edge/partner resources | Centralized compute burden |
| Latency | Faster local inference possible | Depends on network backhaul |

Benefits of federated learning include privacy, compliance, cost savings, and faster development cycles.

- Privacy & Data Sovereignty: Raw data never leaves its source.

- Regulatory Compliance: Simplifies adherence to GDPR, HIPAA, CCPA.

- Cost-Effective Bandwidth Usage: Only model updates traverse networks, not gigabytes of data.

- Edge-Optimized Personalization: Personalized models at the device or branch level without central retraining.

- Faster Innovation Cycles: Participants train in parallel on their own hardware, so teams can iterate without waiting for a central data consolidation project.

Common use cases span several industries:

- Healthcare: Multi-hospital collaboration on diagnostic models without sharing patient records.

- Finance: Cross-bank fraud detection leveraging transactions from multiple institutions.

- Automotive & IoT: Over-the-air model updates for connected vehicles and edge sensors.

- Retail & Supply Chain: Demand forecasting across distributed stores or warehouses.

- Defense & Government: Joint planning across agencies with top-secret data enclaves.

Federated AI thrives on strong governance.

- Policy Enforcement: Role-based (RBAC) or attribute-based (ABAC) access control on who can join training.

- Audit Trails: Immutable logs of model updates and participant contributions.

- Encryption & Secure MPC: Protect updates in transit and at rest.

- Differential Privacy: Add calibrated statistical noise so that the influence of any single record on the model is provably bounded.

- Fragmented data challenge solved: Distributed governance policies stay local while the global model benefits from collective intelligence.
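
One common way to apply the differential privacy item above is to clip each model update to a fixed norm and then add Gaussian noise scaled to that norm. The sketch below assumes this clip-and-noise approach; the function name `privatize_update` and the parameters `clip_norm` and `noise_multiplier` are hypothetical, and the code does not by itself compute a formal privacy budget, which would need a separate privacy accountant.

```python
import numpy as np

def privatize_update(update, clip_norm=1.0, noise_multiplier=1.0, rng=None):
    """Clip an update to at most `clip_norm` (L2), then add Gaussian noise.

    Clipping bounds how much any one contribution can move the model;
    the noise standard deviation is noise_multiplier * clip_norm.
    """
    if rng is None:
        rng = np.random.default_rng()
    norm = np.linalg.norm(update)
    scale = min(1.0, clip_norm / (norm + 1e-12))   # shrink only if the norm is too large
    clipped = update * scale
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=update.shape)
    return clipped + noise

raw = np.array([3.0, 4.0])                          # L2 norm is 5.0
clipped_only = privatize_update(raw, clip_norm=1.0, noise_multiplier=0.0)
noisy = privatize_update(raw, clip_norm=1.0, noise_multiplier=0.5,
                         rng=np.random.default_rng(42))
print(clipped_only, noisy)
```

Whether the noise is added on each client or once on the server after aggregation is a design decision with different trust assumptions; the function works the same way in either place.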

A robust federated learning system requires five essential components working in harmony to enable secure, distributed AI training across multiple organizations.

- Federation Orchestrator: Manages rounds, tracks participant health, aggregates updates.

- Client (Federate) Node: Local data processor, model trainer, encryption module.

- Data Pipelines & Feature Stores: Virtualized and versioned datasets accessible without copying.

- Model Registry & Validation: Stores all model artifacts, metrics, and drift checks.

- Monitoring & Logging: Real-time dashboards for convergence speed, resource usage, and anomaly detection.
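
To make the orchestrator's role more concrete, here is a minimal sketch of the "manages rounds, tracks participant health" part. It assumes a simple heartbeat model in which clients report in periodically and a round starts only when enough of them are recent. The class names, fields, and thresholds are all hypothetical and not taken from any particular product.

```python
from dataclasses import dataclass, field

@dataclass
class ClientStatus:
    client_id: str
    last_heartbeat: float  # seconds, on the same clock as `now` below

@dataclass
class Orchestrator:
    heartbeat_timeout: float = 30.0   # a client is unhealthy after this many silent seconds
    min_clients: int = 2              # do not start a round with fewer participants
    clients: dict = field(default_factory=dict)
    round_number: int = 0

    def heartbeat(self, client_id, now):
        """Record that a client checked in at time `now`."""
        self.clients[client_id] = ClientStatus(client_id, now)

    def healthy_clients(self, now):
        """Return IDs of clients whose last heartbeat is within the timeout."""
        return sorted(
            c.client_id
            for c in self.clients.values()
            if now - c.last_heartbeat <= self.heartbeat_timeout
        )

    def start_round(self, now):
        """Start a new round with the healthy clients, or return None if too few."""
        participants = self.healthy_clients(now)
        if len(participants) < self.min_clients:
            return None
        self.round_number += 1
        return participants

orch = Orchestrator()
orch.heartbeat("hospital-a", now=0.0)
orch.heartbeat("hospital-b", now=20.0)
orch.heartbeat("hospital-c", now=25.0)
first = orch.start_round(now=40.0)    # hospital-a has been silent for 40 s
second = orch.start_round(now=100.0)  # everyone has timed out
print(first, second, orch.round_number)
```

Aggregation, the model registry, and logging would hang off the same round loop; they are omitted here to keep the sketch short.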

Successfully implementing federated learning requires navigating key challenges around data distribution, communication efficiency, model performance, fairness, and version control.

- Non-IID Data Handling: Use personalized aggregation or clustering of similar nodes.

- Communication Overhead: Compress updates, and trade more local epochs per round against fewer communication rounds.

- Model Convergence: Warm-start from a strong global base; use adaptive learning rates.

- Fairness & Bias: Monitor per-node performance; apply re-weighting or fairness constraints.

- Versioning & Rollback: Tag every global model; support instant rollback on drift detection.
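
As an illustration of the "compress updates" advice, the sketch below uses top-k sparsification: each client sends only the k largest-magnitude entries of its update, plus their positions. This is one of several possible compression schemes and is chosen here only as an example; the helper names `topk_compress` and `topk_decompress` are hypothetical.

```python
import numpy as np

def topk_compress(update, k):
    """Keep the k entries with the largest absolute value; return indices, values, shape."""
    flat = update.ravel()
    idx = np.argpartition(np.abs(flat), -k)[-k:]   # positions of the k largest magnitudes
    return idx, flat[idx], update.shape

def topk_decompress(idx, values, shape):
    """Rebuild a dense update with zeros everywhere except the transmitted entries."""
    flat = np.zeros(int(np.prod(shape)), dtype=values.dtype)
    flat[idx] = values
    return flat.reshape(shape)

update = np.array([[0.1, -5.0, 0.2],
                   [3.0,  0.0, -0.3]])
idx, values, shape = topk_compress(update, k=2)
restored = topk_decompress(idx, values, shape)
print(restored)
```

Dropping small entries loses information, so implementations often keep the discarded remainder on the client and add it to the next round's update; that refinement is left out of this sketch.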

Federated learning is evolving toward more sophisticated applications, including private LLM systems, decentralized IoT networks, standardized protocols, and fully autonomous federation management.

- Federated RAG: Combining federated learning with Retrieval-Augmented Generation for private, distributed LLM inference.

- Device-to-Device FL: P2P training loops for IoT swarms without central servers.

- Standardization & Interoperability: Open federated APIs and protocols for cross-vendor collaboration.

- Autonomous FL Systems: Self-optimizing federation that adapts scheduling, security, and aggregation strategies on the fly.

Discover How Axonis Can Revolutionize Your Business

Federated learning isn’t just a buzzword—it’s a strategic imperative for any organization wrestling with fragmented data, privacy regulations, and the need for agile AI.